Submit job script

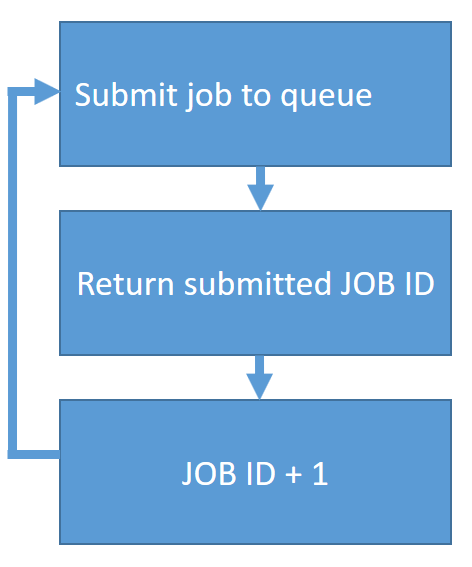

The TMG Executive Menu submits consecutive jobs to the scheduler by calling the submit job script, which defines how the jobs are distributed in the cluster and the order in which they run.

The format of the submit job script depends on the scheduler you use. Each job corresponds to a group of solver modules that run consecutively. Some jobs require only one process, while others run in parallel.

The submit job script requires the following four arguments, in this order:

- `$1`: The name of the job.

- `$2`: The ID of the job that the scheduler must complete before it starts this job, or `-1` if there is no such job. This argument defines the order of the jobs: the scheduler waits for the previous job to complete, even when resources are available to launch the next one.

- `$3`: The number of nodes (CPUs) required for the job. The submit job script retrieves this value from the solution monitor.

- `$4`: The command to run the job.
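To illustrate how the second argument orders the jobs, the following sketch chains several submissions so that each job waits for the previous one. It assumes, for illustration only, that the job number printed by one submission is passed as `$2` to the next. `SUBMIT`, `fake_submit`, `submit_chain`, and the stage names are hypothetical; `fake_submit` only stands in for a real submit job script and pretends that the scheduler numbers jobs 1, 2, 3, and so on.

```shell
#!/bin/bash
# Sketch: submit stages so that each job waits for the previous one.
# SUBMIT, fake_submit and submit_chain are hypothetical names used only
# for this illustration.

# Stand-in for a submit job script: logs its four arguments to stderr and
# prints a job ID on stdout, pretending the scheduler numbers jobs 1, 2, 3...
fake_submit() {
  echo "submit name=$1 after=$2 cpus=$3 cmd=$4" >&2
  if [ "$2" = "-1" ]; then
    echo 1
  else
    echo $(( $2 + 1 ))
  fi
}

# Usage: submit_chain <cpus> <stage>...
# The first stage gets -1 as its dependency; every later stage gets the job
# ID printed for the stage before it. Prints the ID of the last job.
submit_chain() {
  local cpus=$1
  shift
  local prev=-1 stage
  for stage in "$@"; do
    prev=$("$SUBMIT" "$stage" "$prev" "$cpus" "run_$stage") || return 1
  done
  echo "$prev"
}

# Use the stand-in unless SUBMIT already points at a real submit job script
SUBMIT=${SUBMIT:-fake_submit}
```

With the stand-in, `submit_chain 4 mesh solve post` logs `after=-1`, `after=1`, and `after=2` for the three stages and prints `3`. Pointing `SUBMIT` at a real submit job script, with real commands in place of the hypothetical `run_<stage>` placeholders, would make the scheduler's own job numbers flow from one call to the next.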

SGE scheduler submit script example

This file, called script_parallel.sh in this example, is a submit script that submits a job to the SGE scheduler from the TMG Executive Menu. Its content is:

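```shell
#!/bin/bash
#
# * $1 is the job name, visible in the queue
# * $2 is the ID of a job that needs to run before this one. -1 if none.
# * $3 is the number of CPUs for the job
# * $4 is the command to run (with its arguments quote protected)
#
# Submit the run-time script, held until job $2 completes, and capture the qsub message
qsub_output=`qsub -hold_jid $2 -pe monitor $3 -N $1 /shared_nfs/scripts/run_parallel_slave.sh "$4"`
# Keep the largest number in the message as the job number (strtonum requires GNU awk)
job_number=`echo $qsub_output | awk '{for(i=1;i<=NF;i++){ if(strtonum($i)>max){ max = strtonum($i)} }; print max}'`
# Print the job number for the TMG Executive Menu
echo $job_number
```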

where:

- `qsub_output` stores the text that `qsub` prints when it accepts the job, for example `Your job 4711 ("myjob") has been submitted`.

- `qsub -hold_jid $2` defines the job dependency list of the submitted job. The submitted job is only eligible for execution when the job identified by the `$2` parameter has completed.

- `-pe monitor $3` sets `monitor` as the name of the parallel environment, and the `$3` parameter identifies the number of nodes (CPUs) required for the job.

- `-N $1` sets the job name to the value of the `$1` parameter.

- `/shared_nfs/scripts/run_parallel_slave.sh` is the location of the run-time script that prepares the cluster for DMP processing with the SGE scheduler. You can store this file in any directory, provided that the file is accessible from all nodes in the cluster.

- `"$4"` passes the command to run as a single, quoted argument to the run-time script.

- `job_number=...` searches the `qsub` output for the largest number and stores it as the job number. Because `strtonum` is a GNU awk (gawk) extension, this line requires gawk.

- `echo $job_number` prints the job number, which the TMG Executive Menu retrieves.
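The awk line depends on gawk's `strtonum` and prints an empty job number if `qsub` rejects the job. A minimal alternative sketch, assuming the standard SGE confirmation message `Your job <ID> ("<name>") has been submitted`, uses sed to read the number that follows `Your job` and returns a non-zero status when no job ID is found, so the calling script can stop instead of passing an empty ID on. The helper name `sge_job_id` is hypothetical. Grid Engine's `qsub` also documents a `-terse` option that prints only the job ID; if your installation supports it, no parsing is needed at all.

```shell
#!/bin/bash
# Sketch: extract the job ID from the message printed by SGE qsub, assuming the
# standard form:  Your job 4711 ("myjob") has been submitted
# sge_job_id is a hypothetical helper name. It uses sed, so it does not need
# gawk's strtonum, and it fails instead of printing an empty job number.
sge_job_id() {
  local id
  id=$(printf '%s\n' "$1" | sed -n 's/^Your job \([0-9][0-9]*\) .*/\1/p')
  [ -n "$id" ] || return 1   # no confirmation message: submission failed
  printf '%s\n' "$id"
}

# Example: prints 4711
sge_job_id 'Your job 4711 ("myjob") has been submitted'
```

In the submit script, the awk line could then be replaced by `job_number=$(sge_job_id "$qsub_output") || exit 1`.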

The run-time script, run_parallel_slave.sh, sets up the required configuration on the cluster nodes before the job ($4) starts. The master node of the cluster executes this script when the job is launched. The script prepares the master node for parallel processing and executes the command that it receives in its $1 parameter (the $4 argument of the submit script). Depending on the type of scheduler you use, this run-time script may not be required; the alternative is to submit the job directly to the queue.
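The submit script passes the command to the run-time script as one quoted argument (`"$4"`), and the run-time script's last line expands `$1` without quotes, so the shell splits the string back into a command and its arguments. The sketch below, built around a hypothetical `run_job` function that mirrors that last line, shows the effect and its limit: quotes inside the string are not interpreted again, and the unquoted words are also subject to filename (glob) expansion.

```shell
#!/bin/bash
# Illustration only: run_job is a hypothetical function that mirrors the last
# line of run_parallel_slave.sh, which runs the command received in $1.
run_job() {
  # $1 is deliberately unquoted: the shell splits the string on whitespace
  # (and would also apply filename expansion to words such as *.dat)
  $1
}

# The command string arrives as ONE argument, as "$4" does in the submit script
run_job 'printf %s- a b'    # runs printf with format %s- and args a, b: prints a-b-
echo
run_job 'echo "x  y"'       # inner quotes are NOT interpreted: prints "x y"
```

Commands whose individual arguments contain spaces therefore need a different mechanism from the one used here. The content of run_parallel_slave.sh is: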

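```shell
#!/bin/bash
#
# * $1 is the job that is to be executed
#
# Specify the shell used for the job script (scheduler preamble commands)
#$ -S /bin/bash
# Export the environment of the submitting shell to the job
#$ -V
# Place output files in the current working directory
#$ -cwd
# Merge standard error output with standard output
#$ -j y
# Write the job output to <job name>.sgelog
#$ -o $JOB_NAME.sgelog
# Change to the directory from which the job was submitted
cd "$SGE_O_WORKDIR"
export TMP=/tmp
export TEMP=$TMP
# Actually run the job now...
# $1 is left unquoted so that the command and its arguments become separate words
$1
```

LSF scheduler submit script example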

This script, called script_parallel.sh in this example, submits a job to the LSF scheduler from the TMG Executive Menu. Its content is:

```shell
#!/bin/bash
# * $1 is the job name, visible in the queue
# * $2 is the ID of a job that needs to run before this one. -1 if none.
# * $3 is the number of CPUs for the job
# * $4 is the command to run (with its arguments quote protected)
export LOG_FILE=/home/mydirectory/tmgrun
echo "Script_Parallel" >> $LOG_FILE
if [[ "$2" -eq "-1" ]]; then
    echo "Inside if" >> $LOG_FILE
    echo $2 >> $LOG_FILE
    echo $4 >> $LOG_FILE
    export qsub_output=$(bsub -q loc_dev_par -n 8 -G ug_dev_fdbundle -M 100 -app fdbundle -J $1 -P OTHER_IDN -R "rusage[mem=800]" $4 2>&1 )
else
    echo "Inside-else" >> $LOG_FILE
    echo $2 >> $LOG_FILE
    echo $4 >> $LOG_FILE
    if [[ "$3" -eq "1" ]]; then
        export qsub_output=$(bsub -w "done($2)" -q loc_dev_par -n 12 -G ug_dev_fdbundle -M 100 -app fdbundle -J $1 -P OTHER_IDN -R "rusage[mem=800]" $4 2>&1 )
    else
        export qsub_output=$(bsub -w "done($2)" -q loc_dev_par -n $3 -G ug_dev_fdbundle -M 100 -app fdbundle -J $1 -P OTHER_IDN -R "rusage[mem=800]" $4 2>&1 )
    fi
fi
echo "qsub_output=$qsub_output" >> $LOG_FILE
export job_number=`echo $qsub_output | awk -F '<' '{print $2}' | awk -F '>' '{print $1}'`
echo "printing job=$job_number" >> $LOG_FILE
echo $job_number
```

where:

- `LOG_FILE` is the path of the file to which script_parallel.sh writes its diagnostic messages. In this example, the path is `/home/mydirectory/tmgrun`.

- `echo ... >> $LOG_FILE` appends messages and variable values to the LOG_FILE file.

- `export` defines shell variables and exports them to the environment.

- `if [[ "$2" -eq "-1" ]]` checks whether the job depends on a previous job. If `$2` is `-1`, the job is submitted without a dependency; otherwise, it is submitted with the `-w "done($2)"` dependency condition.

- `qsub_output` stores the text that `bsub` prints when it submits the job, including error messages (`2>&1` redirects standard error to standard output).

- `bsub` submits the job to the LSF scheduler and runs the specified command (`$4`).

- `-q loc_dev_par` specifies the target queue. In this example, the target queue is `loc_dev_par`.

- `-n` specifies the number of CPUs required for the job. In this example, the script requests 8 when the job has no dependency, 12 when `$3` is `1`, and `$3` otherwise.

- `-G ug_dev_fdbundle` specifies your user group. In this example, the user group is `ug_dev_fdbundle`.

- (Optional) `-M 100` specifies a memory limit for all the processes of the job. In this example, the memory limit is 100 megabytes; the unit that LSF applies depends on the `LSF_UNIT_FOR_LIMITS` setting in `lsf.conf`.

- `-app fdbundle` specifies an existing application profile. In this example, the application profile is `fdbundle`.

- `-J $1` sets the job name to the value of the `$1` parameter.

- (Optional) `-P OTHER_IDN` assigns the job to a project. In this example, the project name is `OTHER_IDN`.

- (Optional) `-R "rusage[mem=800]"` specifies a resource requirement: LSF runs the job on a host only when the requested resource is available there. In this example, 800 MB of memory is requested for the job.

- `-w "done($2)"` makes the job wait until the job whose ID is `$2` has completed successfully.

- `export job_number=...` extracts the job ID from the `bsub` output, which has the form `Job <ID> is submitted to queue <queue>.`, by printing the text between the first `<` and the following `>`.

- `echo $job_number` prints the job number, which the TMG Executive Menu retrieves. The script also writes this value to the LOG_FILE file.
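The awk pipeline takes the text between the first `<` and the following `>` anywhere in the captured output. Assuming that `bsub` reports a successful submission as `Job <ID> is submitted to queue <queue>.`, the same extraction can be written with a bash regular expression that searches for that confirmation message itself, so other text captured through `2>&1` cannot be mistaken for the job ID. The helper name `lsf_job_id` is hypothetical; it returns a non-zero status when no job ID is found.

```shell
#!/bin/bash
# Sketch: extract the job ID from bsub output, assuming LSF's usual message:
#   Job <12345> is submitted to queue <loc_dev_par>.
# lsf_job_id is a hypothetical helper name.
lsf_job_id() {
  # Keeping the pattern in a variable avoids quoting problems with =~
  local re='Job <([0-9]+)> is submitted'
  if [[ $1 =~ $re ]]; then
    printf '%s\n' "${BASH_REMATCH[1]}"
  else
    return 1   # no confirmation message: the job was not submitted
  fi
}

# Example: prints 12345
lsf_job_id 'Job <12345> is submitted to queue <loc_dev_par>.'
```

In script_parallel.sh, the awk pipeline could then be replaced by `job_number=$(lsf_job_id "$qsub_output") || exit 1`, followed by `echo $job_number`.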